Automated assessment and feedback

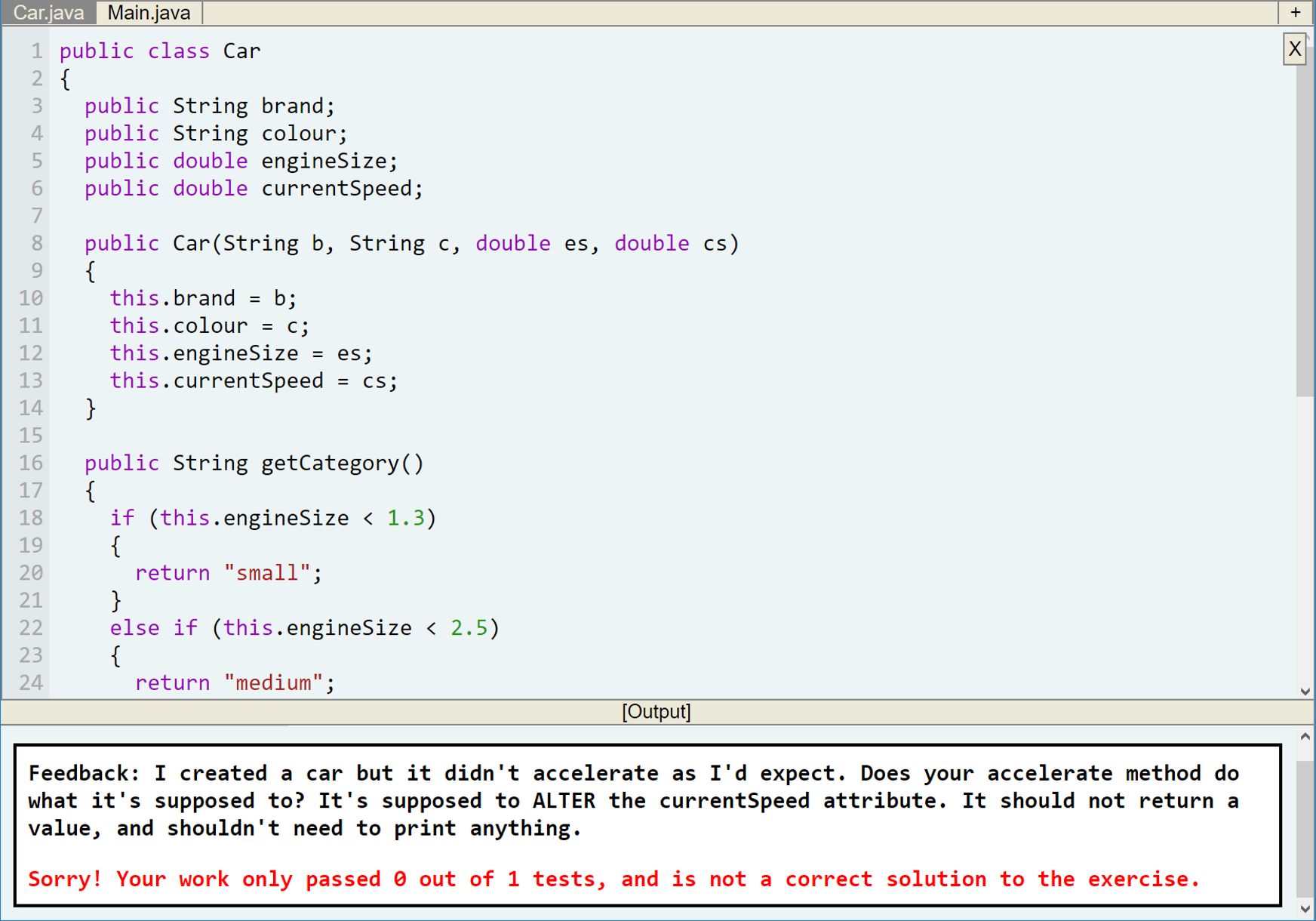

One of the most powerful features of NoobLab is its ability to give the student customised feedback on a programming activity. This can go far beyond a simple indication of correctness: a tutor can create a test that examines a student's code in depth and gives very specific feedback about precisely what is wrong.

All of NoobLab's supported languages allow a tutor to create "test cases": small code fragments that are run against the student's submission. The most basic test case simply encapsulates the criteria under which a medal can be awarded. For example, the student may have been asked to produce a specific output or to create a class with certain characteristics. However, a test case can also embed criteria for specific feedback messages. This allows a tutor to create a NoobLab exercise in which the environment itself takes on the role of the tutor, offering comments and advice on the student's code.
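To make the idea of a test case with embedded feedback more concrete, the following is a minimal, language-neutral sketch in Python. It is not NoobLab's own test-case syntax or API: the names `run_submission`, `check_greeting` and `TestResult` are hypothetical, and the exercise (print `Hello, World!`) is an assumed example. The sketch runs a submission, captures what it prints, and then either awards a medal or returns a message targeted at a common mistake.

```python
import contextlib
import io
from dataclasses import dataclass


@dataclass
class TestResult:
    passed: bool    # hypothetical: True means a medal would be awarded
    feedback: str   # hypothetical: the message shown to the student


EXPECTED = "Hello, World!"  # assumed target output for this illustrative exercise


def run_submission(source: str) -> str:
    """Run the student's source code and return everything it printed.

    A real environment would sandbox untrusted code; exec() here is for illustration only.
    """
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(source, {})
    return buffer.getvalue()


def check_greeting(student_output: str) -> TestResult:
    """Hypothetical test case: award a medal or diagnose a common error."""
    output = student_output.strip()  # ignore the trailing newline added by print()
    if output == "":
        return TestResult(False, "Your program did not print anything. Did you call print()?")
    if output == EXPECTED:
        return TestResult(True, "Well done: your output is exactly right.")
    if output.lower() == EXPECTED.lower():
        return TestResult(False, "Nearly there: check your capital letters.")
    if output.replace(",", "") == EXPECTED.replace(",", ""):
        return TestResult(False, "Close! Look carefully at the punctuation after 'Hello'.")
    return TestResult(False, f"Your program printed {output!r}, but the exercise asks for {EXPECTED!r}.")


if __name__ == "__main__":
    result = check_greeting(run_submission('print("hello, world!")'))
    print(result.passed, result.feedback)
```

The order of the checks matters: the most specific diagnoses come before the generic fallback, so a student who only got the capitalisation wrong is told exactly that rather than receiving a bare "incorrect".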

The content of the feedback is entirely up to the tutor. A tutor might choose an approach in which the environment never gives a complete solution within the feedback; instead, it gives hints designed to nudge a student in the right direction when it observes common errors in their code. Alternatively, an exercise might simply tell the student that their answer is wrong until they reach a given number of attempts, at which point it shows them the complete solution along with an appropriate explanation. Both approaches have their pedagogic place, and NoobLab is flexible enough to let the tutor choose the one that is right for their context.
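The two feedback strategies described above can be sketched as a small decision function. This is an illustration in Python under stated assumptions, not how NoobLab implements them: `FeedbackPolicy`, `choose_feedback` and the default threshold of three attempts are all hypothetical.

```python
from enum import Enum


class FeedbackPolicy(Enum):
    HINTS_ONLY = "hints"               # hypothetical: never reveal the full solution
    REVEAL_AFTER_THRESHOLD = "reveal"  # hypothetical: reveal the solution after N attempts


def choose_feedback(policy: FeedbackPolicy, passed: bool, attempts: int,
                    hint: str, solution: str, threshold: int = 3) -> str:
    """Pick the message for one submission.

    `attempts` counts submissions so far, including the current one.
    The default threshold of 3 is an assumption for illustration.
    """
    if passed:
        return "Correct: medal awarded."
    if policy is FeedbackPolicy.HINTS_ONLY:
        # Nudge the student towards the fix without giving the answer away.
        return hint
    if attempts >= threshold:
        # Threshold reached: show the complete solution with an explanation.
        return "Here is a complete solution, with an explanation:\n" + solution
    return "Not quite right yet: try again."


if __name__ == "__main__":
    for n in range(1, 4):
        print(n, choose_feedback(FeedbackPolicy.REVEAL_AFTER_THRESHOLD, False, n,
                                 "Check your loop bounds.", "for i in range(10): ..."))
```

Keeping the policy as a parameter, rather than hard-coding one behaviour, mirrors the point that the choice between hints and revealed solutions belongs to the tutor.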